How to speed up Docker on Synology with an external SSD, without losing data

Summary: A quick guide on

(a) How to speed up Docker on Synology using a USB external SSD, and

(b) How not to lose data from doing this spectacularly ill-advised hack

[This is a hobby post. Really has nothing to do with UX.]

A while back I started using an older Synology NAS as a backup target for our Macs and my photo library. Last year, I replaced it with a newer, faster one – a two-bay Synology DS220+, loaded up with 18 GB of RAM. (The old one is now an off-site backup target for the primary NAS.)

The dual-core Intel Celeron makes a dandy platform for running Dockerized services. Having developed a healthy mistrust of Big Tech, I started rolling my own services to replace cloud services such as Google Drive, Dropbox, and 1Password, and to try out entirely new-to-me tools such as Paperless-NGX, audiobookshelf, and ArchiveBox.

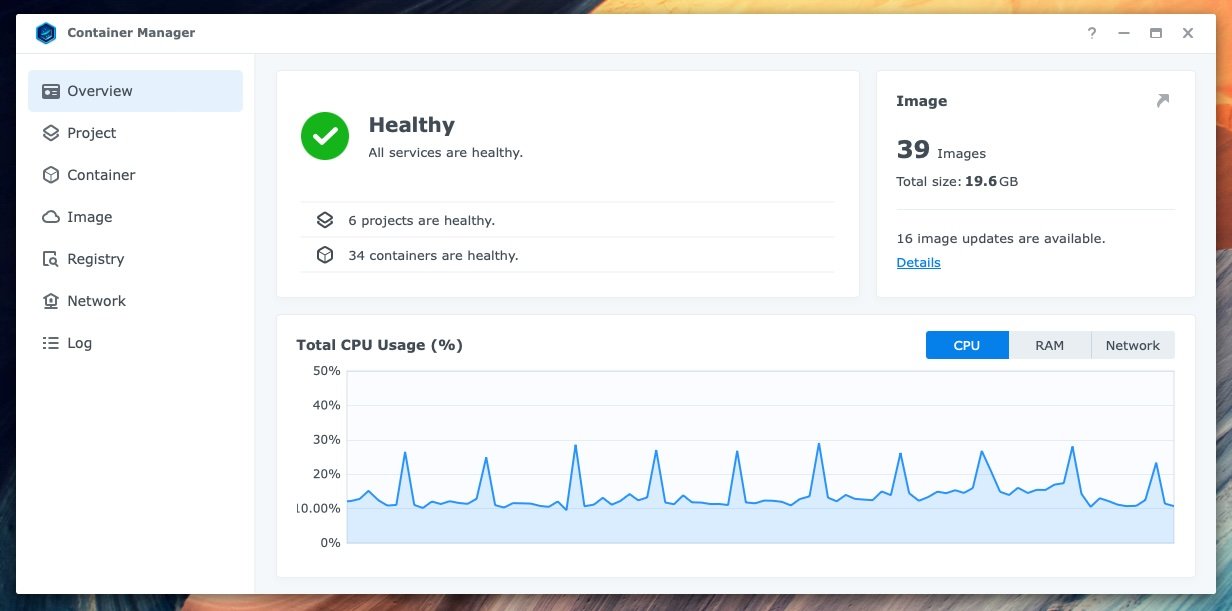

I have a container problem

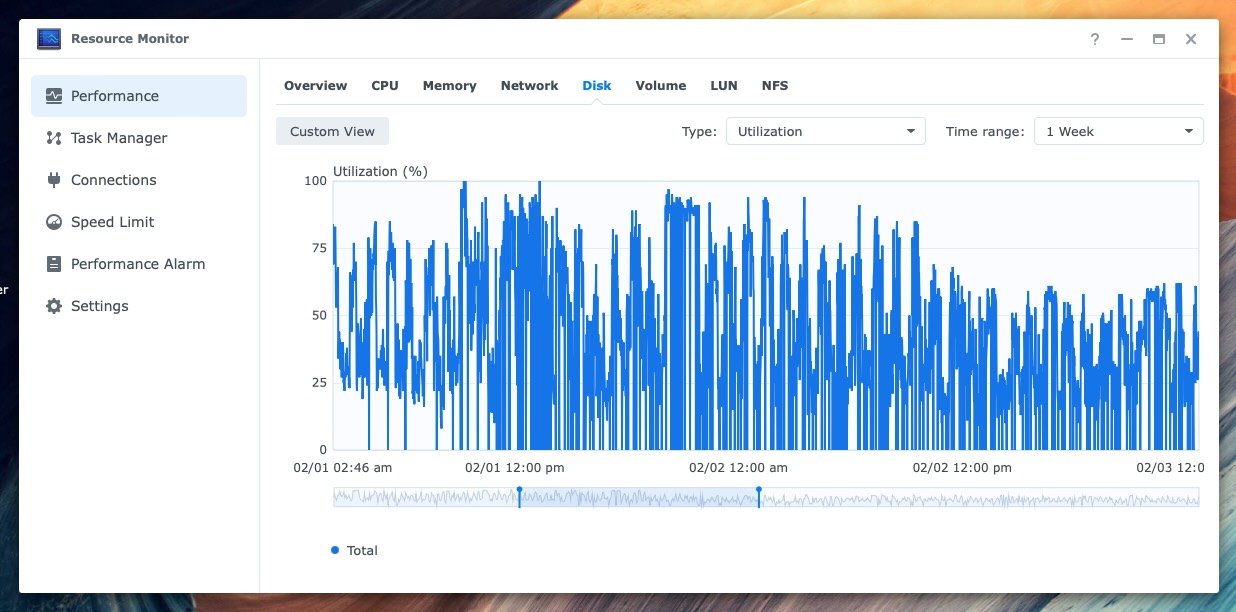

Great! But… the more services I add to this little box, the slower they get. IO contention has become a real problem. Disk activity rarely settles below ~25% for more than a few seconds:

Spinning disks are cheap, but they are very, very not fast

An easy solution is to move all that IO activity to an SSD with nice, low-latency IO. The 220+, however, has no extra drive bays, and no NVMe slots like its pricier siblings, the 720+ and 920+. Hmmm.

This week I have been experimenting with using USB SSD storage, and have moved a couple containers' persistent storage to it.

It’s easy to modify Docker Compose files to point at storage on an external USB SSD. But – how do I put this simply?

Do not use a USB disk for services; it’s just a bad plan. Synology’s HyperBackup will not back up from USB devices, because you shouldn't use them as primary storage. Ever. You know: “Do as I say, not as I do.”

Thanks, Wikipedia Bit Rot entry!

-

USB devices are prone to falling off the bus

USB is error-prone; you’ll never know a few bits were corrupted until you read them back

Synology doesn’t support self-healing Btrfs for external volumes

Did I mention USB devices are prone to falling off the bus when they get cranky? Like when bus power fluctuates a little? USB is far better than it used to be, but it’s still terrible.

-

Yes, it’s possible to modify Synology DSM’s config files to tell it to treat an external drive as an internal one. That’s an even worse idea, but if you really must: Synology SSD Cache on External Devices

So anyway, the services I moved to SSD are far, far, far more responsive! But now they are more vulnerable to data loss, and I also have no backup.

That’s no good.

Requirements

So here are the requirements:

Put the data somewhere HyperBackup can back it up

Make sure that data is not corrupted when you put it there

Make sure that data is up-to-date when it is backed up

I probably could have done this by messing around with symbolic links, but that’s pretty fragile. A better approach is to copy the data to an internal storage volume. But we can’t risk copying while the services are running, because in-use files (e.g. databases) can’t be copied safely. And we should automate it to avoid manual errors.

Here’s what we need to do to meet those requirements.

Stop the Docker projects/containers

Copy the data, preserving permissions

Start the Docker projects/containers

Let HyperBackup do its thing with the copy we made
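The four steps above can be sketched as a single shell function. This is a minimal illustration, not the script I actually run: it assumes Docker Compose v2 (`docker compose`; older installs may need `docker-compose` instead) and `rsync`, and `backup_project` plus every path shown are hypothetical placeholders.

```shell
#!/bin/sh
# Sketch only. backup_project and all example paths are hypothetical.

# Usage: backup_project COMPOSE_FILE SRC_DIR DEST_DIR
backup_project() {
    compose_file="$1"   # e.g. /volume1/docker/paperless/docker-compose.yml
    src_dir="$2"        # persistent storage on the USB SSD
    dest_dir="$3"       # folder on an internal volume that HyperBackup covers

    # 1. Stop the project so databases and other open files are at rest.
    docker compose -f "$compose_file" stop || return 1

    # 2. Copy the data. -a preserves permissions, ownership, and timestamps;
    #    --delete removes files from the copy that are gone from the source.
    mkdir -p "$dest_dir"
    rsync -a --delete "$src_dir/" "$dest_dir/"
    copy_status=$?

    # 3. Start the project again, even if the copy failed.
    docker compose -f "$compose_file" start || return 1

    # 4. HyperBackup picks up dest_dir on its own schedule.
    return "$copy_status"
}
```

A call might look like `backup_project /volume1/docker/paperless/docker-compose.yml /volumeUSB1/usbshare/paperless /volume1/docker-usb-copy/paperless`, with the paths swapped for your own layout. The function returns rsync's exit status, so a scheduled task can tell a failed copy from a good one.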

Automation is our friend

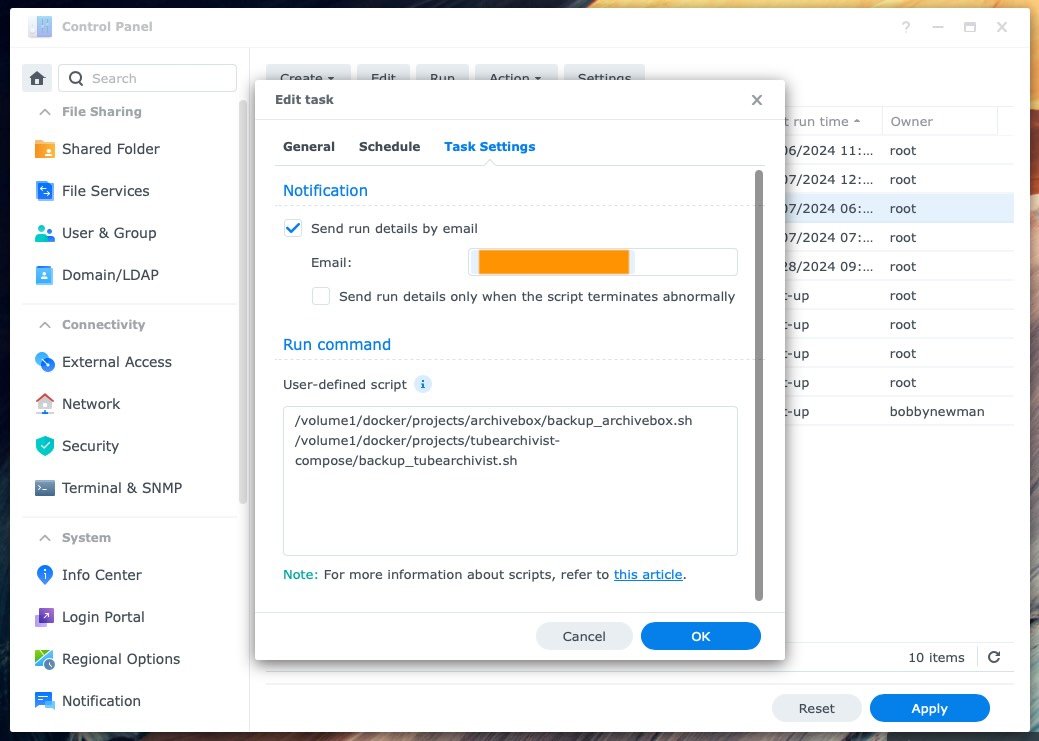

I wrote a little script to stop the Docker projects, copy their persistent storage to built-in disks, then restart the projects. I have DSM Task Scheduler run this once a day. Here's what the script looks like right now:

🚨 Warning! 🚨 Don’t use this script as-is. It needs better error checking and I don't want you losing your data because of my work in progress. But it might be helpful as a jumping off point for your own scripting.

TODOs

I have a couple things I want to circle back to:

Add more error checking, so it doesn’t try to copy files if the Docker projects don’t terminate, and so it reports copy errors, etc.

Generalize it so that one script can iterate through several projects. (Currently I have a separate script for each Docker project, which all but guarantees problems once I get past, say, two of them.)
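Here is a rough sketch of where both TODOs could end up: one script that loops over several projects and refuses to copy anything that did not stop cleanly. It is an illustration under assumptions, not a finished tool. It assumes Docker Compose v2 (for `docker compose ps --status`), `rsync`, and a layout with one subfolder per project. The names `backup_one`, `backup_all`, and all three root paths are hypothetical.

```shell
#!/bin/sh
# Sketch only. Assumes Docker Compose v2 and rsync; every path below is a
# hypothetical placeholder, as are the function names.

COMPOSE_ROOT="${COMPOSE_ROOT:-/volume1/docker}"           # one subfolder per project
USB_ROOT="${USB_ROOT:-/volumeUSB1/usbshare/docker}"       # live data on the USB SSD
BACKUP_ROOT="${BACKUP_ROOT:-/volume1/docker-usb-copy}"    # internal copy for HyperBackup

backup_one() {
    name="$1"
    compose_file="$COMPOSE_ROOT/$name/docker-compose.yml"
    src="$USB_ROOT/$name"
    dest="$BACKUP_ROOT/$name"
    status=0

    # Guard: a missing or empty source usually means the USB drive fell off
    # the bus; copying with --delete would then wipe the good internal copy.
    if [ -z "$(ls -A "$src" 2>/dev/null)" ]; then
        echo "ERROR: $src is missing or empty; skipping $name" >&2
        return 1
    fi

    docker compose -f "$compose_file" stop || status=1

    # Only copy if the stop succeeded and no container is still running.
    if [ "$status" -eq 0 ] &&
        [ -z "$(docker compose -f "$compose_file" ps -q --status running)" ]; then
        mkdir -p "$dest" && rsync -a --delete "$src/" "$dest/" || {
            echo "ERROR: copy failed for $name" >&2
            status=1
        }
    else
        echo "ERROR: $name did not stop cleanly; skipping copy" >&2
        status=1
    fi

    # Always try to bring the project back up.
    docker compose -f "$compose_file" start || {
        echo "ERROR: could not restart $name" >&2
        status=1
    }
    return "$status"
}

backup_all() {
    failures=0
    for name in "$@"; do
        backup_one "$name" || failures=$((failures + 1))
    done
    echo "$failures project(s) failed"
    [ "$failures" -eq 0 ]
}
```

Calling `backup_all paperless audiobookshelf archivebox` would then handle each project in turn and exit non-zero if any of them failed, which is enough for a scheduled task to flag the run.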

Really, this script is kind of awful and I’m a little embarrassed to share it. But if that helps someone else prevent data loss, that’s worth a little embarrassment.

And then schedule your script(s) to run regularly.

PS: Don’t forget to test the backups to make sure you can restore from them.
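One simple way to do that, sketched below: restore a backup to a scratch folder, then compare it against the internal copy it was made from. `verify_restore` is a hypothetical helper and both paths are whatever you choose. Note that `diff -r` compares file contents only, not permissions or ownership, so treat a clean result as necessary rather than sufficient.

```shell
#!/bin/sh
# Sketch only. verify_restore is a hypothetical helper name.

# Usage: verify_restore ORIGINAL_DIR RESTORED_DIR
verify_restore() {
    original="$1"
    restored="$2"
    # diff -r walks both trees and compares file contents byte for byte;
    # it also reports files that exist on only one side.
    if diff -r "$original" "$restored" > /dev/null 2>&1; then
        echo "OK: $restored matches $original"
    else
        echo "MISMATCH: $restored differs from $original" >&2
        return 1
    fi
}
```

Compare against the internal copy rather than the live data on the SSD, since the live data keeps changing once the containers are running again.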